Too Much Insulation

Posted on Tue 21 September 2010 in Rumination

In many ways the field of artificial life has been quite successful, even if its faddish popularity has died off somewhat in the last few years. However, artificial life systems have had only limited success in clearly exhibiting the capacity for open-ended evolution, that is, the capacity to sustain an indefinite increase in organism complexity over time. Ten years ago, Bedau et al. wrote that "a key challenge is whether digital systems based on symbolic logic harbor the same potential for evolutionary innovation as physical systems...Many believe that digital life today falls far short in this regard..." (2000, p. 369). This problem continues to be a topic of active discussion within the field.

My goal here is not so much to discuss artificial life per se as to zero in progressively on a grounded approach to the analysis of situations at the symbolic level.

We'll next take a brief look at agent-based modeling, using NetLogo as our example.

Agent-Based Modeling in NetLogo

NetLogo is an easy-to-use agent-based simulation package inspired by the Logo programming language. Designed as a "low threshold, no ceiling" environment, it is widely used to give students and other novices hands-on experience with agent-based modeling.

A NetLogo simulation is made up of three basic agent primitives: mobile agents called turtles, stationary agents called patches that typically make up environments, and linking agents called links. Building a simulation entails defining agents of each type and placing them together in a structured environment.

For demonstration purposes, I have written a simple NetLogo model that I include below (Java required). The objective of the simulation is to demonstrate, in a very naive fashion, that migration is a non-genetic means of adaptation. Each patch agent is assigned an environment-type, represented as an integer, and is colored on the basis of that environment-type. I have organized the world so that all patches of the same environment-type occur in horizontal bands across it. Each turtle agent has an environment preference. If the turtle is not located on a patch with the environment-type it prefers, it chooses a random direction in which to move. The probability that a turtle will move, given its local patch's environment-type, is an adjustable parameter. A chart tracks how (subjectively) suited the turtles are to their environmental circumstances over time.
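The NetLogo source is not reproduced here, so what follows is only a rough Python sketch of the rules just described, not the model itself. Several details are assumptions made for illustration: the world wraps at its edges, turtles move one grid cell at a time in one of eight directions, a turtle that is already on a preferred patch never moves, and the names make_world, step, fraction_suited, run and move_prob are all hypothetical.

```python
import random

# Eight neighbouring cells; grid movement is a simplifying assumption.
DIRECTIONS = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]


class Turtle:
    """Mobile agent with a position and a preferred environment-type."""

    def __init__(self, x, y, preference):
        self.x = x
        self.y = y
        self.preference = preference


def make_world(width, height, n_types):
    """Patches as a grid of integers; each type fills a horizontal band."""
    return [[(y * n_types) // height for _ in range(width)] for y in range(height)]


def step(world, turtles, move_prob, rng):
    """Unsuited turtles move one cell in a random direction with probability move_prob."""
    height, width = len(world), len(world[0])
    for t in turtles:
        unsuited = world[t.y][t.x] != t.preference
        if unsuited and rng.random() < move_prob:
            dx, dy = rng.choice(DIRECTIONS)
            t.x = (t.x + dx) % width   # wrap-around world (assumption)
            t.y = (t.y + dy) % height


def fraction_suited(world, turtles):
    """Share of turtles standing on a patch of their preferred type."""
    return sum(world[t.y][t.x] == t.preference for t in turtles) / len(turtles)


def run(n_turtles=200, width=20, height=21, n_types=3, move_prob=0.5, steps=300, seed=0):
    """Run the sketch and return the suitedness series that the chart would plot."""
    rng = random.Random(seed)
    world = make_world(width, height, n_types)
    turtles = [
        Turtle(rng.randrange(width), rng.randrange(height), rng.randrange(n_types))
        for _ in range(n_turtles)
    ]
    history = [fraction_suited(world, turtles)]
    for _ in range(steps):
        step(world, turtles, move_prob, rng)
        history.append(fraction_suited(world, turtles))
    return history


if __name__ == "__main__":
    series = run()
    print(f"suited at start: {series[0]:.2f}, at end: {series[-1]:.2f}")
```

Under these assumptions the suitedness series can never decrease, since a turtle that has found a preferred patch stays put. No genome changes anywhere in the run, which is the naive sense in which migration acts as a non-genetic means of adaptation.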

Although NetLogo is a much simpler agent-based simulation package than packages like Swarm or Repast, it has much in common with them. In particular, the design of agent-based simulations typically proceeds in a top-down fashion by identifying the objects of interest, and then constructing software agents that model these. This will typically involve defining the various data (variables, parameters, etc.) making up each agent and each agent's behavioral routines. If the package is an object-oriented one, then an agent class will define a set of data and a set of interface methods so that other objects may interact with instantiated members of that class.
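As a minimal illustration of that last point, here is a Python sketch of two agent classes, each bundling its own data with a small set of interface methods. It is not drawn from Swarm, Repast, or NetLogo; the class and method names (Patch, Turtle, environment_type, move_to, is_suited) are hypothetical.

```python
class Patch:
    """Stationary agent: holds its own data and exposes one query method."""

    def __init__(self, environment_type: int):
        self._environment_type = environment_type  # private by convention

    def environment_type(self) -> int:
        """Interface method: the only way other objects read this patch's type."""
        return self._environment_type


class Turtle:
    """Mobile agent: its preference and location are hidden behind methods."""

    def __init__(self, preference: int, patch: Patch):
        self._preference = preference
        self._patch = patch

    def move_to(self, patch: Patch) -> None:
        """Interface method: relocate the turtle onto another patch."""
        self._patch = patch

    def is_suited(self) -> bool:
        """Interface method: does the current patch match the preference?"""
        return self._patch.environment_type() == self._preference


if __name__ == "__main__":
    cold, warm = Patch(0), Patch(1)
    turtle = Turtle(preference=1, patch=cold)
    print(turtle.is_suited())  # False: the turtle starts on the wrong patch
    turtle.move_to(warm)
    print(turtle.is_suited())  # True
```

Everything a turtle can do to a patch, or a patch to a turtle, has to be written into these class definitions in advance. That is what makes the design tidy, and it is also why a Turtle has no route to becoming a Patch.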

Costs of an Encapsulated Approach to Modeling

This top-down approach to modeling is quite natural, and has very real benefits. This is especially true of object-oriented agent-based platforms, since the modularity and encapsulation the object-oriented programming model provides naturally fit the encapsulated notion of agent at the heart of the agent-based modeling paradigm. Nonetheless, this top-down approach suffers some tangible drawbacks, the most important of which is its ontological rigidity. Objects of one kind cannot transmute into objects of another, except through tremendous effort on the part of the programmer. Environments and objects do not interpenetrate, except in mostly predefined ways, which makes open-endedness difficult to achieve. Agents do not emerge from the world's physics, but, topsy-turvy, define much of the world's physics from the top down. Agents are the basement, and it is swept very clean.

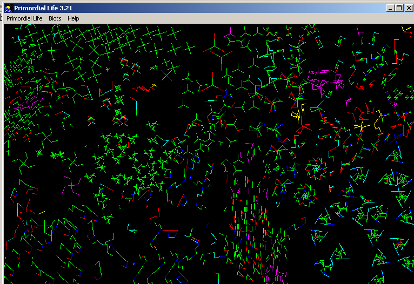

In some ways this can be seen most clearly in some artificial life simulations and some agent-based simulations of evolutionary processes. I have enjoyed a little toy artificial life system called Primordial Life for several years, and I'm going to very unfairly pick on it because it exhibits the basic problem I am discussing in an obvious way.

Primordial Life builds all its organisms up out of parts that have fixed and predefined functions: the green segment does photosynthesis, the light blue one moves the organism, the red segment tries to feed off other organisms, and so on. An organism is composed of these parts arranged in particular geometric configurations. Thus, evolutionary processes operate on a space of possibilities defined by the possible arrangements of these functional parts. Since every component comes packaged with a set functionality, innovative reuse or re-adaptation of organism components is severely limited. The result is a set of organisms whose constitutional and environmental poverty hobbles any chance to achieve truly open-ended evolutionary freedom[1].
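To make the limitation concrete, here is a small Python sketch of a genome built from fixed-function parts. It is not Primordial Life's actual representation, which I have not inspected: the flat tuple genome, the point-mutation operator, and every name below are assumptions, and only the three segment types mentioned above are modeled.

```python
import random
from enum import Enum


class Segment(Enum):
    """Each part's function is fixed by the designer, never by evolution."""
    PHOTOSYNTHESIZE = "green"
    MOVE = "light blue"
    EAT = "red"


def mutate(genome, rng):
    """Hypothetical point mutation: swap one part for another predefined part."""
    child = list(genome)
    i = rng.randrange(len(child))
    child[i] = rng.choice(list(Segment))
    return tuple(child)


def functions_of(genome):
    """The set of functions an organism can perform is just the set of its parts."""
    return set(genome)


def genome_space_size(length):
    """Number of distinct arrangements available to genomes of a given length."""
    return len(Segment) ** length


if __name__ == "__main__":
    rng = random.Random(0)
    genome = (Segment.PHOTOSYNTHESIZE,) * 5
    for _ in range(1000):
        genome = mutate(genome, rng)
    # However long evolution runs, no function outside the menu can appear.
    print(functions_of(genome) <= set(Segment))  # True
    print(genome_space_size(5))                  # 243
```

The search space can be made large by lengthening the genome, but it stays closed: mutation only reshuffles the menu of functions it was handed.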

Admittedly, I am picking on a toy artificial life system, not one that is actively used in the field. To be fair, artificial life simulations run the gamut in their approach. Tierra and Avida, for example, take a different approach from the one just described. Organisms are made up of primitive computer programs consisting of machine-like instructions competing for computer resources. Machine instructions include instructions to copy and reproduce. Since I haven't had the time to look at these systems carefully, I will leave any comment for the future. Nonetheless, many evolutionary simulations inside and outside of alife make use of various kinds of such functional primitives. Bedau et al. (2000, p. 368) write:

In contrast with popular theories of evolution, in which evolution is defined with respect to given symbolic genes and rules such as for replication or recombination, a proper theory of evolving life must allow evolution to create new symbolic descriptions and rules to manipulate them. In general, these entities are formed by an underlying physical dynamics, and then evolution sharpens and reinforces this symbolic level. How can such systems be modeled effectively where no state transition rules at the new symbolic level are specified in advance, in contrast with current modeling practice. One may try to mitigate this drawback by successively imposing additional constraints, but the challenge is to allow these dynamical constraints to emerge from the underlying dynamical system. This appears essential for modeling spontaneity or autonomy in life. In fact, there are two issues here: how physics can give rise to dynamical systems that can operate on the symbolic level, and how and why physical systems that are alive tend to expand and refine their symbolic platform. The first part of the challenge is to provide a theory explaining how dynamical systems can generate phenomena best understood as novel symbolic and rule-based behavior, that is, creating novel properties independent of detailed fluctuations in configuration. The second part is to specify under what conditions a natural discrete classification of dynamical states is recognized and reinforced by a dynamical system itself to structure its future evolution.

The reader may recall the importance Brian Cantwell Smith placed on the world's flex and slop in the emergence of intentionality from the metaphysical flux. In his essay "Things about Things," Daniel Dennett argues that the noisiness of the world is a tremendous source of evolutionary innovation:

But let me make the point in a deeper and more general context. We have just seen an example of an important type of phenomenon: the elevation of a byproduct of an existing process into a functioning component of a more sophisticated process. This is one of the royal roads of evolution.(6) The traditional engineering perspective on all the supposed subsystems of the mind--the modules and other boxes--has been to suppose that their intercommunications (when they talk to each other in one way or another) were not noisy. That is, although there was plenty of designed intercommunication, there was no leakage. The models never supposed that one box might have imposed on it the ruckus caused by a nearby activity in another box. By this tidy assumption, all such models forego a tremendously important source of raw material for both learning and development. Or to put it in a slogan, such over-designed systems sweep away all opportunities for opportunism. What has heretofore been mere noise can be turned, on occasion, into signal. But if there is no noise--if the insulation between the chambers is too perfect--this can never happen. A good design principle to pursue, then, if you are trying to design a system that can improve itself indefinitely, is to equip all processes, at all levels, with "extraneous" byproducts. Let them make noises, cast shadows, or exude strange odors into the neighborhood; these broadcast effects willy-nilly carry information about the processes occurring inside. In nature, these broadcast byproducts come as a matter of course, and have to be positively shielded when they create too many problems; in the world of computer simulations, however, they are traditionally shunned--and would have to be willfully added as gratuitous excess effects, according to the common wisdom. But they provide the only sources of raw material for shaping into novel functionality. (Dennett 1998)

As a software developer, I look askance at the world's messiness, as a problem to be minimized and controlled. Engineered systems frequently need to be clean, robust, and modular. As an information theorist, I find the flex and slop of the world something more than an inconvenience; instead it is a potential ally in a search for a richer and less brittle theory of information. As an anthropologist, I view it as an inevitable part of getting on with the business of life.

REFERENCES

Bedau, M.A., McCaskill, J.S., et al. "Open problems in artificial life," Artificial Life, 2000 Fall 6(4):363-76. http://authors.library.caltech.edu/13564/1/BEDal00.pdf

Dennett, Daniel. "Things about Things," final draft for Lisbon Conference on Cognitive Science, May 1998. http://ase.tufts.edu/cogstud/papers/lisbon.htm

[1] This is not to say that no interesting results can be achieved. For example, the Primordial Life simulation can be quite fascinating to observe and speculate upon. One can watch as one design innovation displaces another in an organic orgy of competitive interactions, watch cyclic fluctuations in populations, and observe mass extinctions. And I have seen some quite sophisticated organisms emerge (beware the novice who hooks up to the network!).